Transparent Texting to Allow Mobile Users to Safely Walk and Text (and More)

Nowadays, we enter text not only on stationary devices but also on mobile devices while on the move (which we term "nomadic text entry"). One issue with nomadic text entry is that users must frequently shift their visual focus between the device and their surroundings to navigate. Not doing so can have unfortunate consequences, as this video shows (many more are just a YouTube search away).

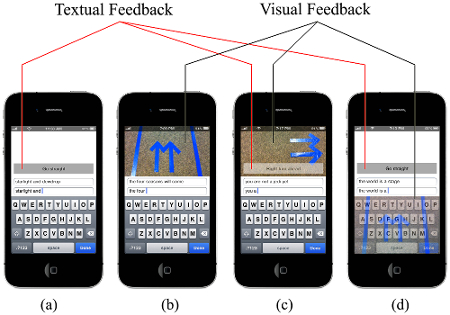

To help mobile users walk and text more efficiently and safely, we developed four new feedback systems: textual, visual, textual and visual, and textual and visual via a translucent keyboard.

1. Textual feedback provides information about the surroundings in written form above the text input field, similar to the turn-by-turn directions provided by GPS navigation devices. We used a Wizard of Oz method to test this approach, but it is not hard to imagine that in the near future most urban objects will be able to communicate with each other and thus inform mobile users of their presence via their mobile devices.

2. Visual feedback provides live video of the surroundings. We used the device's back camera to display a view of the environment behind the device above the text input field.

3. Textual and visual feedback combines (1) and (2).

4. Textual and visual via translucent keyboard feedback is similar to (3), but instead of using a separate visual feedback area, it uses a translucent virtual keyboard to display the camera view behind the keys. In other words, it provides visual feedback directly on the keyboard.

We hypothesized that providing users with real-time feedback about their surroundings would improve nomadic text entry performance in terms of entry speed and accuracy, and would also make it safer by reducing the number of collisions with obstacles.

We conducted a user study to test this hypothesis. Results showed that the new feedback systems significantly improve overall nomadic text entry performance and reduce the likelihood of collisions. For details, see our paper below.

A. S. Arif, B. Iltisberger, W. Stuerzlinger. 2011. Extending mobile user ambient awareness for nomadic text entry. In Proceedings of the 23rd Conference of the Computer-Human Interaction Special Interest Group of Australia on Computer-Human Interaction (OzCHI ’11). ACM, New York, NY, USA, 21-30.

We came up with the idea in 2009, submitted the ethics review protocol for the user study in 2010, conducted the study immediately after the protocol was approved, and published the work in 2011. Around the same time, several developers also released apps that support similar feedback systems. More interestingly, Apple recently filed a transparent texting patent that essentially describes feedback systems (2) and (4) (see the article below). Nevertheless, we are excited by the prospect of seeing this in consumer products soon!

Lomas, N. Apple Files Transparent Texting Patent To Help People Who Walk And Text. TechCrunch (Mar 27, 2014)

This project was funded by NSERC, OGSST, and York University.